CS424 Notes, 14 March 2012

- Review of Coordinate Systems and Transformations

- We have looked at several transformations that are commonly used in OpenGL: the modeling transform, the viewing transform, and the projection transform. Each of these transforms maps points expressed in one coordinate system to points in another coordinate system.

- The modeling transform takes object coordinates to world coordinates and, for hierarchical graphics, can be composed of several modeling transforms that map object coordinates for simpler objects to object coordinates for more complex objects.

- The viewing transform takes world coordinates to eye coordinates, in which the camera or viewer is at the origin, looking down the negative z-axis, with the positive y-axis pointing upwards and the positive x-axis pointing right in the view. The modeling transform and the viewing transform are generally combined into a single modelview transform.

- The projection transform maps eye coordinates into clip coordinates. It determines the view volume, which is the rectangular solid (for orthogonal projection) or truncated pyramid (for perspective projection) containing the part of the scene that is actually visible.

- The modelview transform and projection transform are part of the OpenGL fixed-function pipeline. For WebGL (and OpenGL ES 2.0), you have to implement them yourself and pass the transform matrices to the shader program. Only the clip coordinates that come out of the vertex shader (from an assignment to gl_Position) have a built-in meaning for the graphics system.

- There is one more transform, the viewport transform, that is performed by the hardware between the vertex shader and the fragment shader. (Before applying it, the hardware divides the x, y, and z clip coordinates by the w coordinate, which is where the perspective effect comes from.) The result is a point in device coordinates in which the x and y coordinates are pixel coordinates and the z-coordinate is the pixel's depth value that is used in the depth test. These coordinates are available in the fragment shader as the value of the special variable gl_FragCoord. (When I tried to use texture coordinates based on gl_FragCoord, I found that in some browsers the y coordinate in this variable increased from bottom to top, while in others it increased from top to bottom.)

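- Here, then, is the sequence of operations: object coordinates → (modeling transform) → world coordinates → (viewing transform) → eye coordinates → (projection transform) → clip coordinates → (viewport transform) → device coordinates.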
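The chain of transforms can be traced numerically with a short sketch in plain JavaScript (no WebGL calls, so it runs anywhere). It assumes 4×4 matrices stored as 16-element arrays in column-major order, which is the layout WebGL expects when a matrix is sent to a shader with `gl.uniformMatrix4fv`; the projection is the standard OpenGL perspective matrix (the one `gluPerspective` builds); and the helper names `perspective`, `transformPoint`, and `toViewport` are hypothetical. The modelview transform is taken to be the identity, so the input point is already in eye coordinates.

```javascript
// Hypothetical helpers. Matrices are 16-element arrays in column-major order,
// so the element in row r, column c is m[c * 4 + r].

// OpenGL-style perspective projection (same matrix as gluPerspective).
// fovy is the vertical field of view in degrees.
function perspective(fovy, aspect, near, far) {
  const f = 1 / Math.tan((fovy * Math.PI) / 360); // cot(fovy / 2)
  const nf = 1 / (near - far);
  return [
    f / aspect, 0, 0, 0,
    0, f, 0, 0,
    0, 0, (far + near) * nf, -1,
    0, 0, 2 * far * near * nf, 0,
  ];
}

// Multiply a column-major 4x4 matrix by the column vector [x, y, z, w].
function transformPoint(m, p) {
  const out = [0, 0, 0, 0];
  for (let r = 0; r < 4; r++) {
    for (let c = 0; c < 4; c++) {
      out[r] += m[c * 4 + r] * p[c];
    }
  }
  return out;
}

// Clip coordinates -> normalized device coordinates (divide by w)
// -> viewport (pixel) coordinates. Depth is mapped to [0, 1],
// the default depth range.
function toViewport(clip, vx, vy, width, height) {
  const ndc = clip.map((v) => v / clip[3]);
  return [
    vx + ((ndc[0] + 1) / 2) * width,
    vy + ((ndc[1] + 1) / 2) * height,
    (ndc[2] + 1) / 2,
  ];
}

// A point straight ahead of the viewer in eye coordinates (the viewer looks
// down the negative z-axis), so it should land at the center of the viewport.
const proj = perspective(60, 800 / 600, 1, 100);
const clip = transformPoint(proj, [0, 0, -10, 1]);
const pixel = toViewport(clip, 0, 0, 800, 600);
console.log(pixel); // x = 400, y = 300 (center of an 800x600 canvas)
```

In a real WebGL program, the first two steps happen in the vertex shader (with the matrices passed in as uniforms) and the last step is done by the hardware; the sketch only makes the arithmetic visible.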

- About the Viewport

- About the "viewport": the viewport is the rectangular area in which the scene is displayed. For us, in all cases, it has been the entire HTML canvas on which the scene is displayed. However, it is possible to set a different viewport, presumably some subset of the canvas. You might do this, for example, if you wanted to draw several different scenes, or several views of the same scene, on the canvas. You would simply set up a different viewport before drawing each scene. The viewport can be set by calling `gl.viewport(x, y, width, height);` where (x,y) gives the lower left corner of the viewport and width and height give the viewport size. Note that these are integer values expressed in the pixel coordinate system of the canvas. The default setting for the viewport is `gl.viewport(0, 0, canvas.width, canvas.height);`. Note that if you ever resize a canvas after getting a WebGL drawing context for the canvas, you will have to set the viewport to match the new size, or WebGL will continue to use the previous viewport. You can find out the current viewport by calling `gl.getParameter(gl.VIEWPORT)`, which returns an array of four numbers containing the current viewport's x, y, width, and height. (There are a lot of other values you can determine by calling `gl.getParameter` with various arguments.)
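Drawing several views on one canvas amounts to computing one viewport rectangle per view. Here is a minimal sketch; the helper name `gridViewports` and the grid layout are hypothetical. The rectangles follow the `gl.viewport` convention that (x, y) is the lower left corner, so the top row of views has the larger y value.

```javascript
// Hypothetical helper: split a canvas into a rows x cols grid of viewports.
// Each viewport is [x, y, width, height] in canvas pixels, with (x, y) the
// LOWER-LEFT corner, as gl.viewport expects. Views are listed in reading
// order: left to right, starting with the top row.
function gridViewports(canvasWidth, canvasHeight, rows, cols) {
  const w = Math.floor(canvasWidth / cols); // viewport sizes must be integers
  const h = Math.floor(canvasHeight / rows);
  const viewports = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      const x = col * w;
      const y = canvasHeight - (row + 1) * h; // y is measured up from the bottom
      viewports.push([x, y, w, h]);
    }
  }
  return viewports;
}

const views = gridViewports(800, 600, 2, 2);
console.log(views);
// [ [0, 300, 400, 300], [400, 300, 400, 300], [0, 0, 400, 300], [400, 0, 400, 300] ]

// In a real program (assuming gl is a WebGL context and drawScene is your own
// drawing function), you would do something like:
//   for (const [x, y, w, h] of views) {
//     gl.viewport(x, y, w, h);
//     drawScene();
//   }
```

One caveat: `gl.clear` is not restricted to the viewport, so clearing each view separately would also require the scissor test (`gl.enable(gl.SCISSOR_TEST)` together with `gl.scissor`).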

- Materials

- In the standard OpenGL fixed-function pipeline, a primitive can have a "material" that determines how that primitive interacts with light. (Materials are actually specified at vertices. In the fixed-function pipeline, lighting is computed at each vertex using that vertex's material, and the resulting colors are interpolated to get a color for each pixel in the primitive.) A material in standard OpenGL is defined by a set of five properties, and the same material properties are used in other computer graphics contexts as well. You can use the same model in WebGL, although of course you have to program it yourself!

- The standard material properties are ambient color, diffuse color, specular color, emissive color, and shininess. The first four properties are colors, given as arrays of four numbers (red, green, blue, and alpha) between 0.0 and 1.0. The last property, shininess, is a number between 0.0 and 128.0.

- An ambient, diffuse, or specular material color specifies what fraction of light the material reflects. In the standard lighting model, which goes along with the standard material model, there are three types of light: ambient, diffuse, and specular. Ambient color specifies how much ambient light the material reflects, diffuse color specifies how much diffuse light it reflects, and specular color specifies how much specular light it reflects. Furthermore, any light in this model is a combination of red, green, and blue, and a color also has red, green, and blue components. So the red component of a material color is the fraction of the red component of the light that is reflected, the green component is the fraction of the green component of the light that is reflected, and the blue component is the fraction of the blue component of the light that is reflected. Altogether, there are nine interactions of light with material: red, green, and blue for each of ambient, diffuse, and specular light. (In standard OpenGL, the alpha component of the diffuse material color is used as the alpha of the lit color, where it matters for blending; the alpha components of the other material colors and of lights have no real purpose.)

- The emissive color is different (and is not used very often). It represents color that is not associated with any external light source. A non-black emissive color makes a surface look a little like it is glowing or giving off some light. However, it doesn't really emit light, since it doesn't illuminate any other objects in the scene. Actual light sources in OpenGL are themselves invisible. You might make an object look like a light source by giving it an emissive color and putting a light source inside the object to give off the actual illumination.

- We have encountered diffuse and specular reflection previously. Diffuse light is simply light that can be reflected diffusely, and specular light is light that can be reflected specularly. Diffuse and specular light come from light sources. (It would be very unusual for the diffuse and specular colors of a light source to be different.)

- Ambient light is light that does not come from any direction and does not have an identifiable source. It is environmental light that has bounced around so many times that its source is no longer recognizable, and it illuminates everything in the scene evenly. Even an object that is shadowed from all light sources will be illuminated by ambient light. Ambient light is the reason that shadows are not perfectly black.
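The bookkeeping described in these paragraphs (emissive color, plus each kind of light multiplied component by component by the matching material color) can be sketched as follows. This is a simplified illustration, not OpenGL's exact equation: it handles a single light, ignores alpha, and leaves out the global ambient light and distance attenuation that OpenGL also supports. The helper names are hypothetical, and the geometric factors `diffuseFactor` (typically the cosine of the angle between the surface normal and the direction to the light) and `specularFactor` (which depends on the shininess property) are assumed to be computed elsewhere.

```javascript
// Sketch of how material colors combine with light colors (RGB only; alpha ignored).
// Colors are [r, g, b] arrays with components between 0.0 and 1.0.

// Component-by-component product: the red component of the material color is the
// fraction of the red light that is reflected, and similarly for green and blue.
function multiplyColors(material, light) {
  return [material[0] * light[0], material[1] * light[1], material[2] * light[2]];
}

// Hypothetical helper. diffuseFactor and specularFactor are assumed to be numbers
// in [0, 1] computed elsewhere from the geometry (light direction, normal, viewer).
function shadeColor(material, light, diffuseFactor, specularFactor) {
  const ambient = multiplyColors(material.ambient, light.ambient);
  const diffuse = multiplyColors(material.diffuse, light.diffuse);
  const specular = multiplyColors(material.specular, light.specular);
  const result = [];
  for (let i = 0; i < 3; i++) {
    const sum = material.emissive[i] + ambient[i]
      + diffuseFactor * diffuse[i] + specularFactor * specular[i];
    result.push(Math.min(sum, 1)); // each component is clamped to at most 1.0
  }
  return result;
}

// A red, plastic-like material lit by a white light.
const redPlastic = {
  ambient: [0.2, 0, 0],
  diffuse: [0.8, 0, 0],
  specular: [0.5, 0.5, 0.5],
  emissive: [0, 0, 0],
};
const whiteLight = { ambient: [0.5, 0.5, 0.5], diffuse: [1, 1, 1], specular: [1, 1, 1] };

// A point shadowed from the light still gets ambient light, so it is not black.
console.log(shadeColor(redPlastic, whiteLight, 0, 0)); // [0.1, 0, 0]
// A point facing the light directly, away from any highlight.
console.log(shadeColor(redPlastic, whiteLight, 1, 0)); // [0.9, 0, 0]
```

Note that the white specular color of the material gives a white highlight even though the object itself looks red, which is the usual look of plastic in this model.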

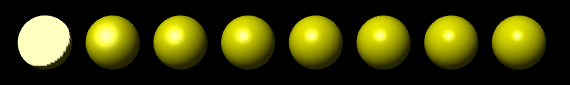

- The material property "shininess" affects the way that specular light is reflected by a surface.

Larger values of shininess produce sharper specular highlights. For example:

This picture was produced using standard OpenGL. The materials of the spheres are identical except that the shininess property increases from 0 on the left to 128 on the right.
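The effect of shininess can be quantified with a small sketch. In the standard model the specular contribution is scaled by cos(θ) raised to the power shininess; OpenGL's fixed-function pipeline takes θ as the angle between the surface normal and the half-way vector between the light and viewer directions (the Blinn-Phong form). The helper names below are hypothetical. The function `halfIntensityAngle` computes the angle at which that factor falls to one half, a rough measure of the highlight's size, which shrinks as shininess grows.

```javascript
// Specular factor in the standard model: cos(angle) raised to the power
// shininess, where angle is measured between the surface normal and the
// half-way vector (OpenGL) or between the reflected ray and the viewer (Phong).
// Only angles below 90 degrees contribute; beyond that the factor is 0.
function specularFactor(angleDegrees, shininess) {
  const c = Math.cos((angleDegrees * Math.PI) / 180);
  return c > 0 ? Math.pow(c, shininess) : 0;
}

// Angle (in degrees) at which the specular factor falls to one half,
// a rough measure of the size of the highlight. Not meaningful for
// shininess 0, where the factor is 1 at every angle below 90 degrees.
function halfIntensityAngle(shininess) {
  return (Math.acos(Math.pow(0.5, 1 / shininess)) * 180) / Math.PI;
}

for (const s of [1, 8, 32, 128]) {
  console.log(`shininess ${s}: highlight half-width ${halfIntensityAngle(s).toFixed(1)} degrees`);
}
```

With shininess 0 the factor is 1 at every angle below 90 degrees, so there is no localized highlight at all; as shininess increases, the angle shrinks and the highlight becomes a smaller, sharper spot.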

- You should understand that this is not physics! The lighting and material model is not physically realistic. It's designed to produce results that look reasonably good, with a reasonable amount of calculation.

- I found a table of material property values that simulate various actual materials at http://devernay.free.fr/cours/opengl/materials.html